Last year, Google released their “GoEmotions” dataset: a human-labeled dataset of 58K Reddit comments categorized according to 27 emotions.

The problem? A whopping 30% of the dataset is severely mislabeled! (We tried training a model on the dataset ourselves, but noticed deep quality issues. So we took 1000 random comments, asked Surgers whether the original emotion was reasonably accurate, and found strong errors in 308 of them.) How are you supposed to train and evaluate machine learning models when your data is so wrong?

For example, here are 25 mislabeled emotions in Google’s dataset.

Comments mislabeled as NEGATIVE emotions

- LETS FUCKING GOOOOO – mislabeled as ANGER, likely because Google's low-quality labelers don’t understand English slang and mislabel any profanity as a negative emotion

- *aggressively tells friend I love them* – mislabeled as ANGER

- you almost blew my fucking mind there. – mislabeled as ANNOYANCE

- daaaaaamn girl! – mislabeled as ANGER

- [NAME] wept. – mislabeled as SADNESS, likely because Google’s non-fluent labelers don’t understand the idiomatic meaning of “Jesus wept”, and thought someone was truly crying

- I try my damndest. Hard to be sad these days when I got this guy with me – mislabeled as SADNESS

- hell yeah my brother – mislabeled as ANNOYANCE

- [NAME] is bae, how dare you. – mislabeled as ANGER, likely because the labelers don’t know what “bae” means, and aren’t fluent enough in online English usage to realize that “how dare you” is written in a mock anger tone

Comments mislabeled as NEUTRAL emotions

- But muh narrative! Orange man caused this!!!!! – mislabeled as NEUTRAL, likely because labelers don’t understand who “orange man” refers to, or the mockery behind writing “muh” instead of “my”

- My man! – mislabeled as NEUTRAL, likely because labelers don’t know what this phrase means

- KAMALA 2020!!!!!! – mislabeled as NEUTRAL, likely because the non-US labelers don’t know who Kamala Harris is or didn't have enough context

- Hi dying, I'm dad! – mislabeled as NEUTRAL, likely because labelers don’t understand dad jokes

Comments mislabeled as POSITIVE emotions

- I love when they send in the wrong meat, it’s only happened to me once – mislabeled as LOVE

- Nobody has the money to. What a joke – mislabeled as JOY

- Yay, cold McDonald's. My favorite. – mislabeled as LOVE

- Really? Wow. You’re either hopelessly ignorant or you’re trolling. For your sake, I hope you’re trolling. – mislabeled as OPTIMISM

- These 2 are repulsive little kids – mislabeled as APPROVAL (I don’t have any explanation, other than this in the kind of quality you get when you throw warm bodies at the problem of data labeling instead of building robust infrastructure)

- Yeah, because not paying a bill on time is equal to murdering children. – mislabeled as APPROVAL, likely because of the “Yeah”

- I wished my mom protected me from my grandma. She was a horrible person who was so mean to me and my mom. – mislabeled as OPTIMISM

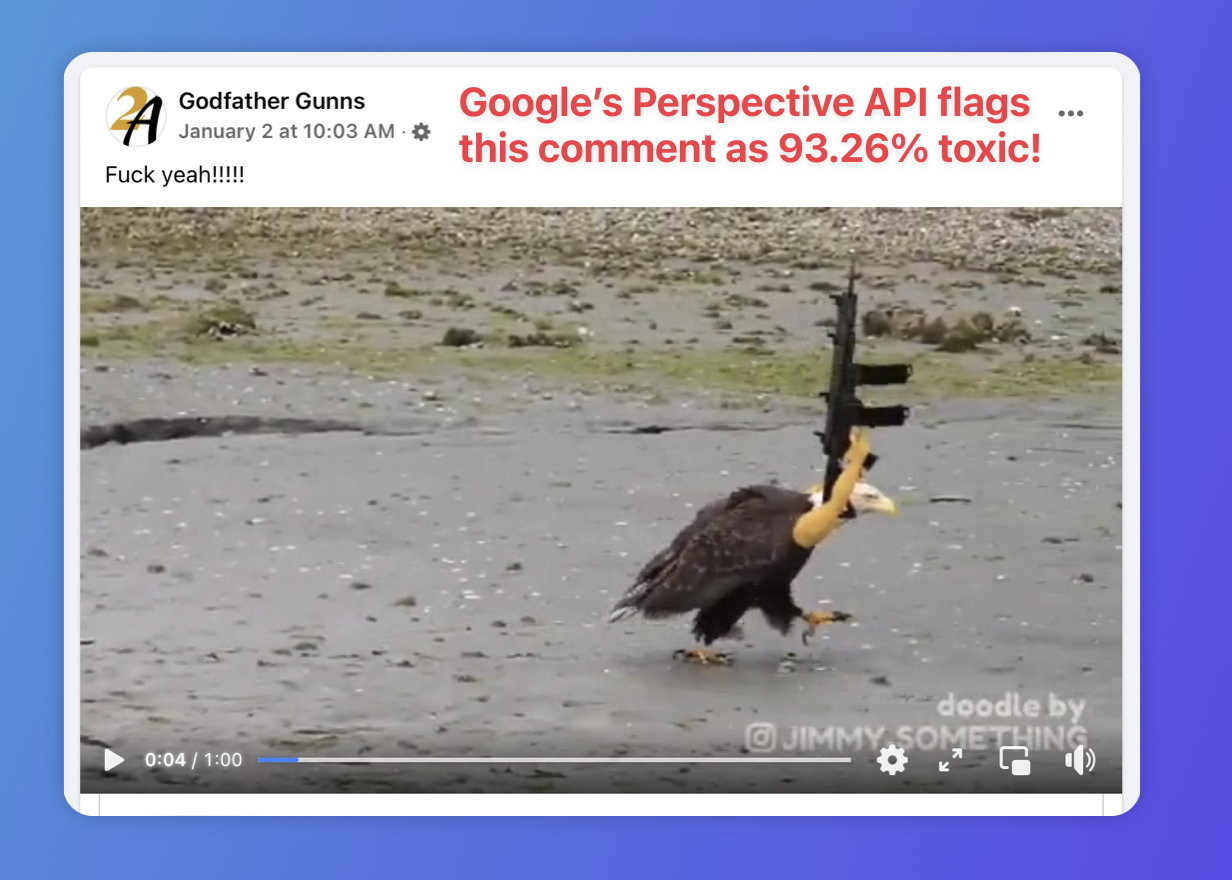

In other words, when Google can’t even label daaaaaamn girl! or These 2 are repulsive little kids correctly, is it any surprise that Google’s Toxic Speech API is merely a profanity detector?

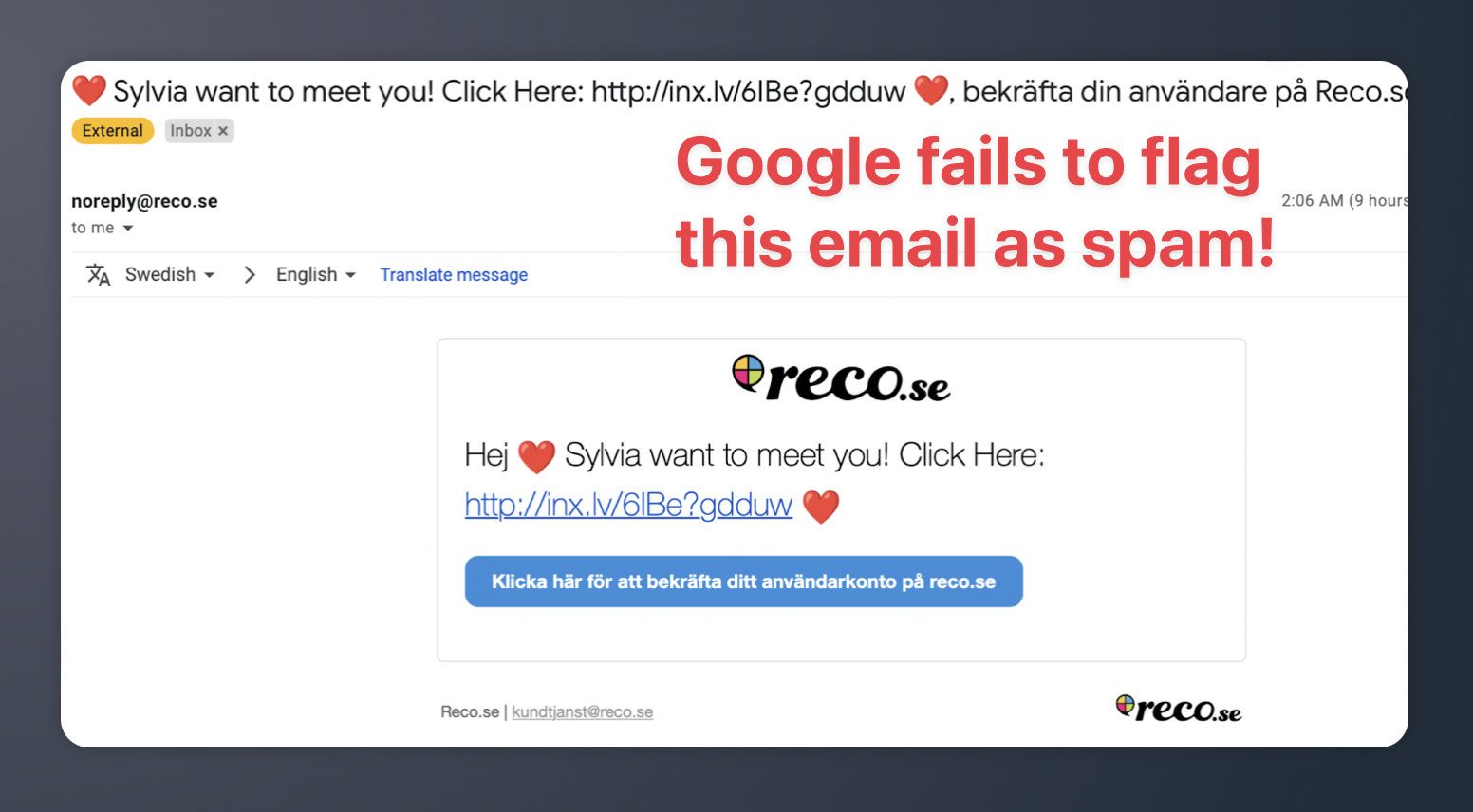

Or that Gmail’s spam detector is deteriorating?

When good data is crucial for good models – in a research paper specifically designed to create a dataset, no less! – can we really trust Google to create unbiased real-world AI?

Google’s Flawed Data Labeling Methodology

To summarize the types of errors in Google’s dataset, many come from:

- Profanity – mislabeling hell yeah my brother as ANNOYANCE instead of APPROVAL or EXCITEMENT.

- English idioms – mislabeling Jesus wept as SADNESS and What a joke as JOY.

- Sarcasm – mislabeling Yay, cold McDonald’s. My favorite. as LOVE

- Basic English – mislabeling These 2 are repulsive little kids as APPROVAL

- US politics and culture – mislabeling But muh narrative! Orange man caused this!!!!! as NEUTRAL

- Reddit memes – mislabeling Hi dying, I’m dad! as NEUTRAL instead of AMUSEMENT

What’s causing these issues? A big part of the problem is Google treating data labeling as an afterthought to throw warm bodies at, instead of as a nuanced problem that requires sophisticated technical infrastructure and research attention of its own.

For example, let’s look at the labeling methodology described in the paper. To quote Section 3.3:

- “Reddit comments were presented [to labelers] with no additional metadata (such as the author or subreddit).”

- “All raters are native English speakers from India.”

Problem #1: “Reddit comments were presented with no additional metadata”

First of all, language doesn’t live in a vacuum! Why would you present a comment with no additional metadata? The subreddit and parent post it’s replying to are especially important context.

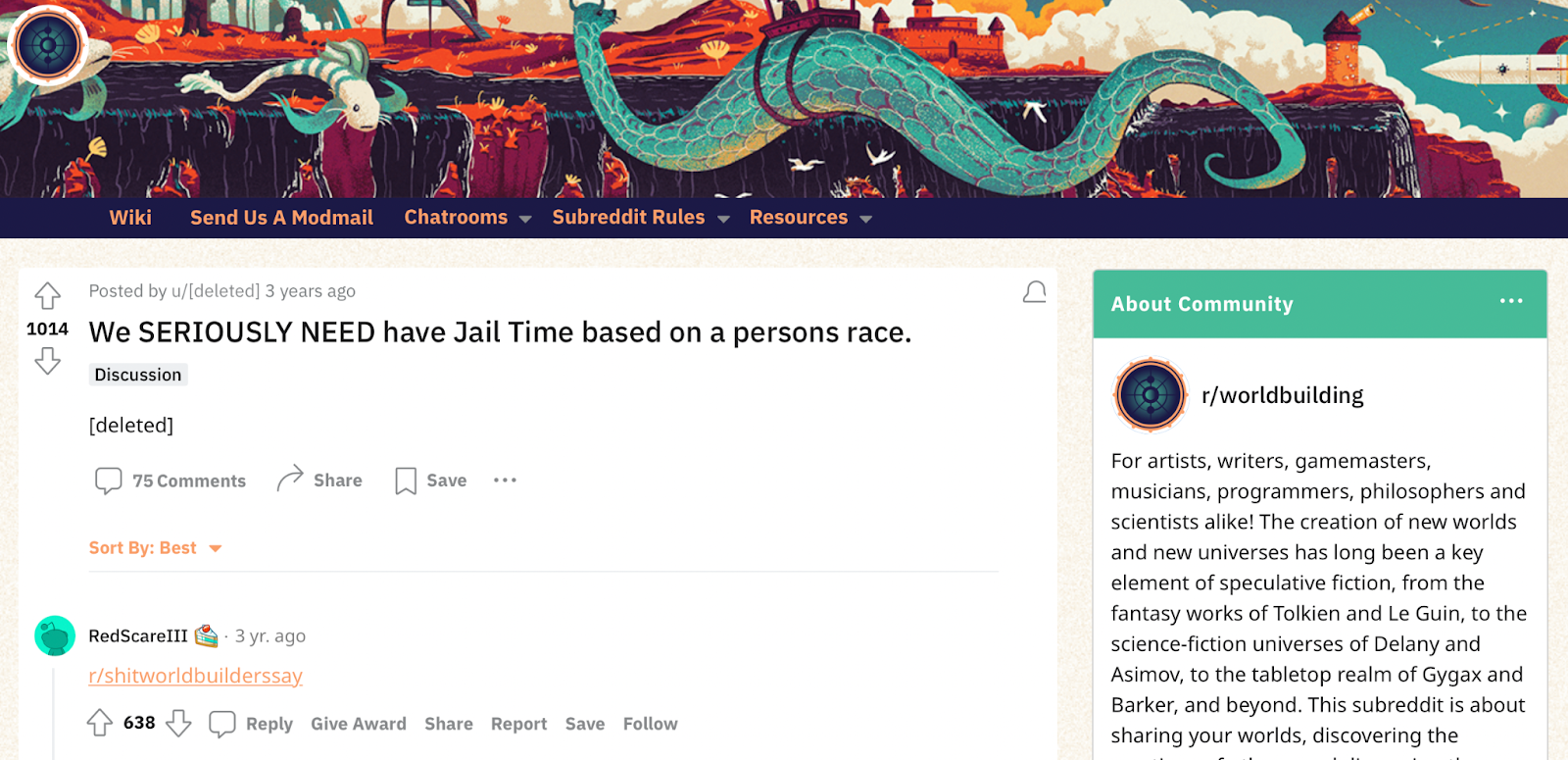

For example, “We SERIOUSLY NEED to have Jail Time based on a person's race” means one thing in a subreddit about law, and something completely different in a subreddit about fantasy worldbuilding.

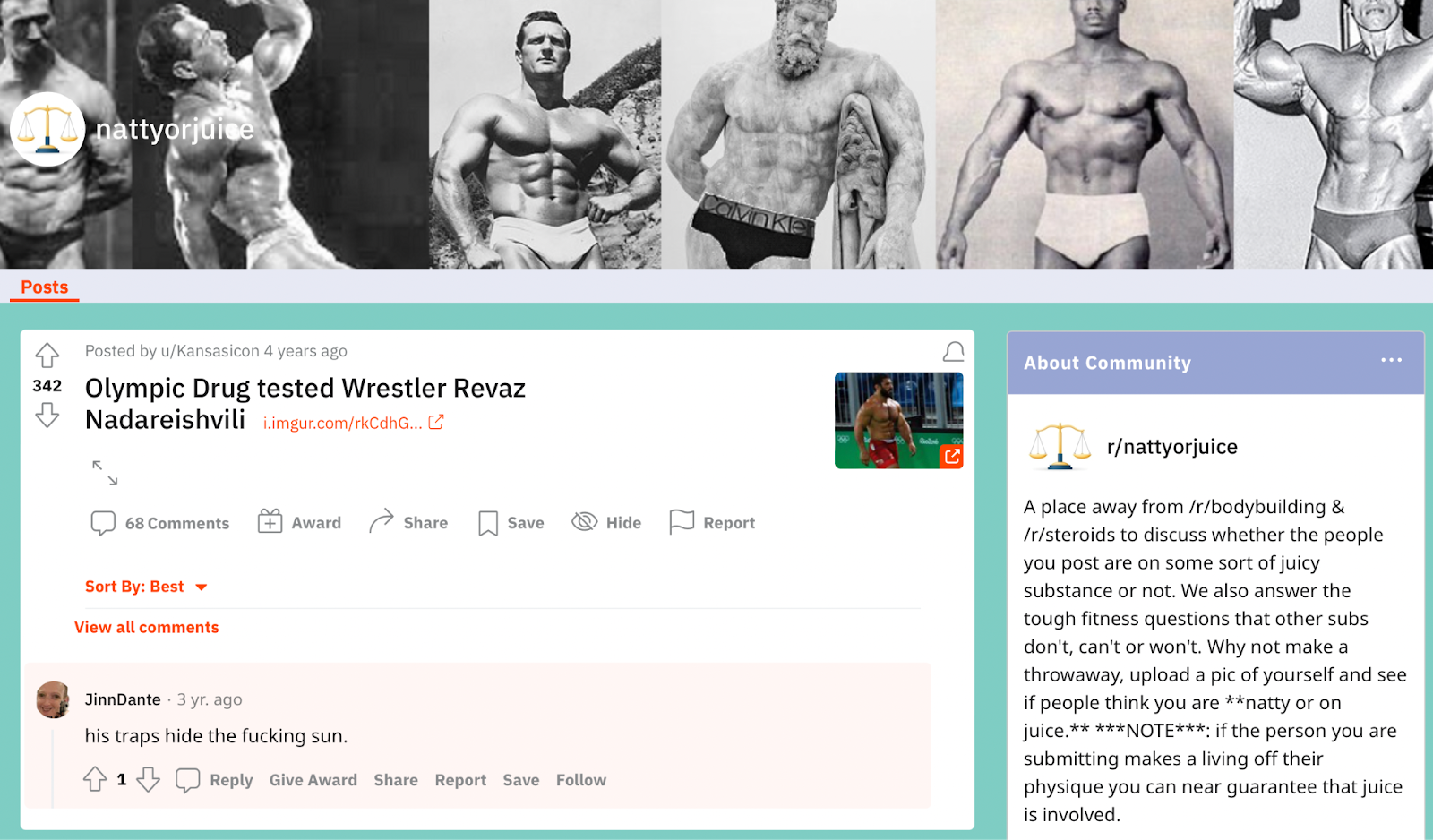

As another example, imagine you see the comment “his traps hide the fucking sun” by itself. Would you have any idea what it means? Probably not – maybe that's why Google mislabeled it.

But what if you were told it came from the /r/nattyorjuice subreddit dedicated to bodybuilding? Would you realize, then, that traps refers to someone’s trapezoid muscles?

What, moreover, if you were given the actual link to the comment, and saw the picture it was replying to? Would you realize, then, that the comment is pointing out the size of the man’s muscles?

With this extra context, a good data labeling platform wouldn't have mislabeled the comment as NEUTRAL and ANGER like this dataset did.

Problem #2: “All raters are native English speakers from India”

Second, Google used data labelers unfamiliar with US English and US culture – despite Reddit being a US-centric site with particularly specialized memes and jargon.

Is it a surprise that these labelers don’t understand sarcasm, profanity, and common English idioms in the texts that they’re labeling?

That they can’t correctly label comments like But muh narrative! Orange man caused this!!!!! where you need familiarity with US politics? Or that they mislabel comments like Hi dying, I'm dad! where you need to understand Reddit culture and memes?

That’s why when we relabeled the dataset, our technical infrastructure and human-AI algorithms allowed us to:

- Leverage our labeling marketplace to build a team of Surgers who aren't only native US English speakers, but also heavy Reddit and social media users who understand all of Reddit's in-jokes, the nuances in US politics (important for social media labeling, given its trickiness and prevalence!), and the cultural zeitgeist. (Who said you can't be a professional memelord?)

- Test labelers to make sure they were labeling sarcasm, idioms, profanity, and memes correctly – e.g., dynamically giving them exams to make sure only labelers who understood But muh narrative! Orange man caused this!!!!! could work on the project.

- Double-check cases where our AI prediction infrastructure differed from human judgments.

The Importance of High-Quality Data

If you want to deploy ML models that work in the real world, it’s time for a focus on high-quality datasets over bigger models – just listen, after all, to Andrew Ng's focus on data-centric AI.

Hopefully Google learns this too!

Otherwise those big, beautiful traps may get censored into oblivion, and all the rich nuances of language and humor with it...

–

Have you experienced frustrations getting good data? Want to work with a platform that treats data as a first-class citizen, and gives it the loving attention and care it deserves? Follow us at @HelloSurgeAI