“The team at Surge AI understands the unique challenges of training large language models and AI systems. Their human data labeling platform is tailored to provide the unique, high-quality feedback needed for cutting-edge AI work. Surge AI is an excellent partner to us in supporting our technical AI alignment research.” — Jared Kaplan, Anthropic Co-Founder

Overview: Anthropic Is One of the World’s Leading AI and LLM Companies

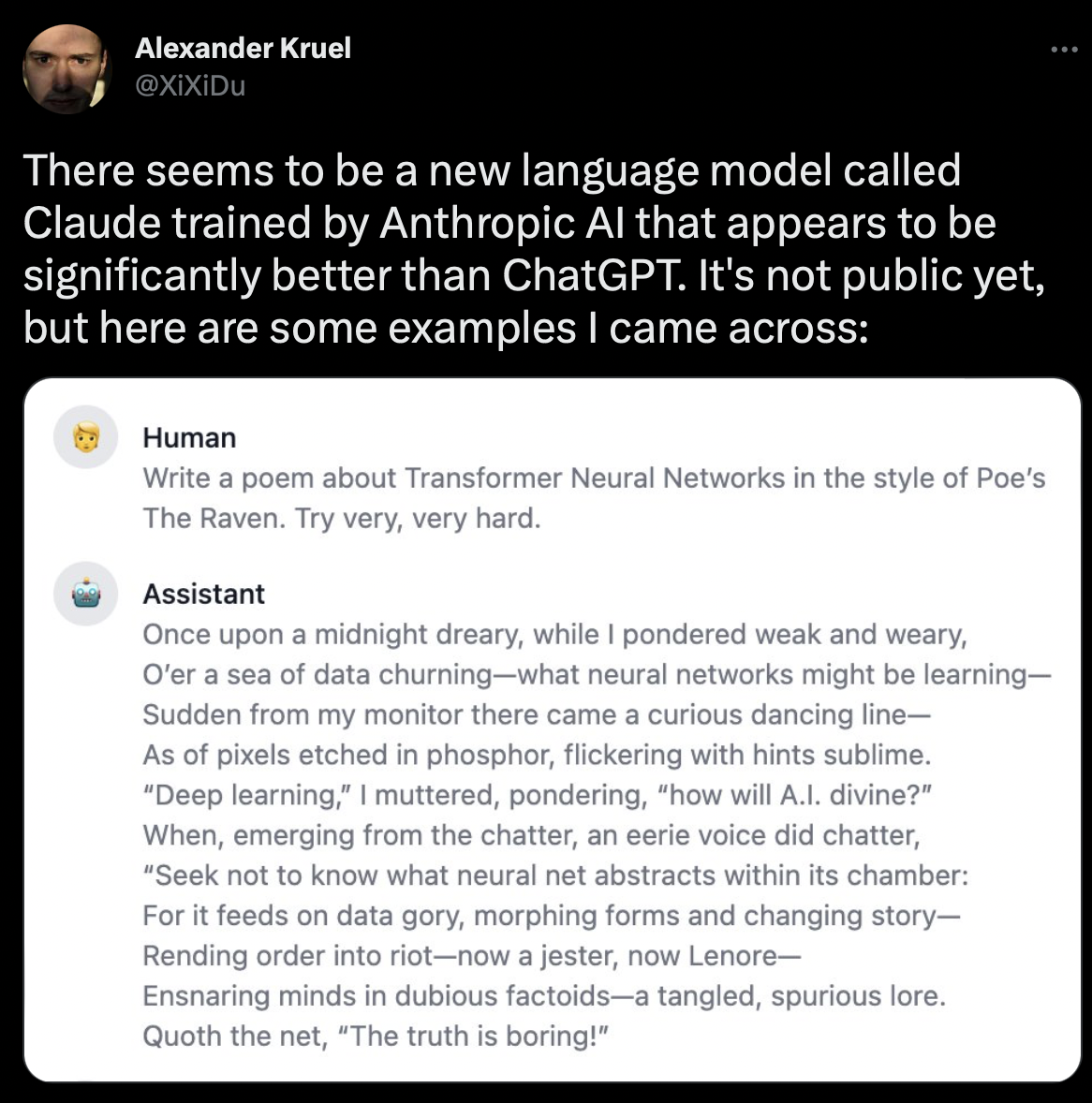

Anthropic is one of the world’s leading AI companies, building safe, state-of-the-art large language models and AI systems. Anthropic was founded by a team of former OpenAI and Google Brain researchers, and their AI assistant, Claude, is already one of the safest, most capable LLMs on the planet – even surpassing OpenAI’s ChatGPT in a multitude of domains.

The Problem: Building Trustworthy, High-Quality Human Feedback

Researching and leveraging human data scaling laws – the power of human feedback for making AI systems safer and more useful – has been a key focus for Anthropic from the start. Their research on “Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback” has been one of the most important advances in the field, exploring how to train a general language assistant to be helpful without providing harmful advice or exhibiting bad behaviors.

However, building the data pipeline needed to gather high-quality human feedback at scale was challenging. Finding people with the skills needed to annotate and label language model outputs was laborious, building robust quality control infrastructure was difficult, and developing labeling tools took time away from their core research.

Anthropic evaluated several crowdsourcing and data labeling platforms, but found that they lacked large language model expertise and that it was difficult to get the quality they needed.

After learning of Surge AI’s work with other key AI labs and large language model companies, Anthropic began using the Surge AI LLM platform for their RLHF needs.

The Solution: Rich Human Feedback via Surge AI’s RLHF Platform

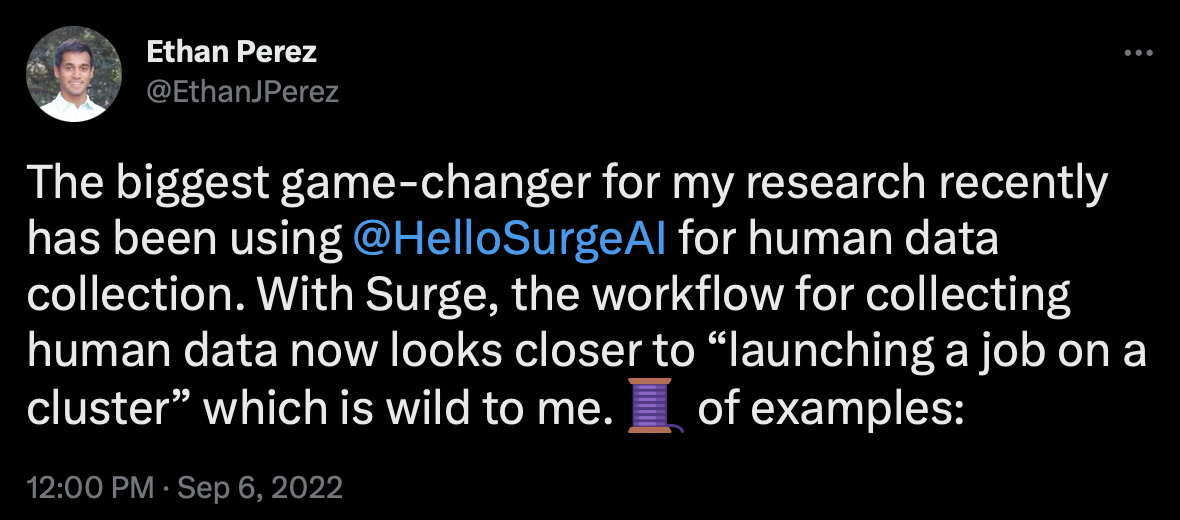

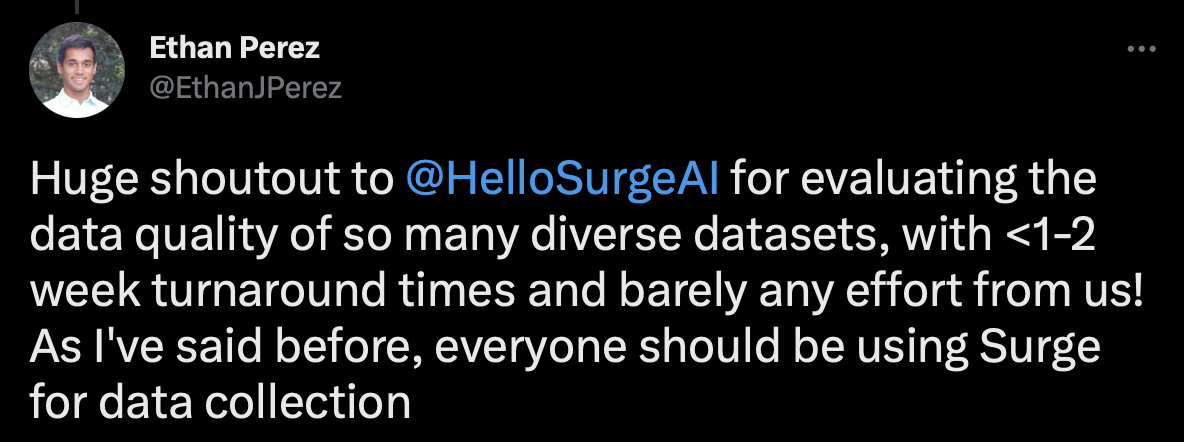

Anthropic has called Surge AI’s RLHF and human data a game changer for their research.

Some of the key features Anthropic leverages include:

- Proprietary quality control technology. Large language models are remarkably sensitive to low-quality data, which is typical of other data labeling companies and often sets research teams back by years. Our advanced human/AI algorithms and technology were built by our team of scientists and researchers, who’ve worked on this problem for decades.

- Domain expert labelers. The Surge AI platform was designed for the next generation of AI. As language models become increasingly advanced, they need increasingly sophisticated human feedback to teach them, whether it’s learning to solve mathematical problems (see our collaborations with OpenAI), learning to code, or learning to converse like an expert in domains such as law, medicine, business, and STEM subjects. Our domain expert labeling teams provide the range of deep skills Anthropic needs to teach LLMs the full breadth of human language.

- Rapid experimentation interface. As researchers in a fast-evolving field, Anthropic’s scientists and engineers need to be able to design and launch new jobs quickly — without spending months writing long guidelines, or iterating 10 times in search of the quality they need. Our APIs and RLHF interfaces allow them to integrate their own tools and platforms for long-running jobs, while spinning up new jobs on the fly.

- Red teaming tools. To keep LLMs safe, Surgers red-team Anthropic’s current safety defenses to uncover new holes to be patched.

- RLHF and Language Model expertise. Instead of constant recalibration to discover how to make things work, our deep experience in RLHF and language models ensures that Anthropic gets the high-quality data they need every time – based on proven methods we’ve uncovered from hundreds of Surge-internal experiments.

Results: Anthropic’s Claude – a Safe, Highly Capable, State-of-the-Art AI Assistant

RLHF is widely recognized as the key ingredient that separates LLMs like ChatGPT from the previous generation. And with their own creative takes on leveraging the rich human feedback that Surge AI enables, Anthropic has taken this advance one step further.

With our partnership, Anthropic has been able to build one of the safest, most advanced LLMs on the planet. And as they continue to push the boundaries of human feedback in novel ways, Surge AI has been thrilled to partner with them on their journey.