There's been a lot of discussion on Hacker News recently about the quality of Google Search, and whether it’s deteriorated.

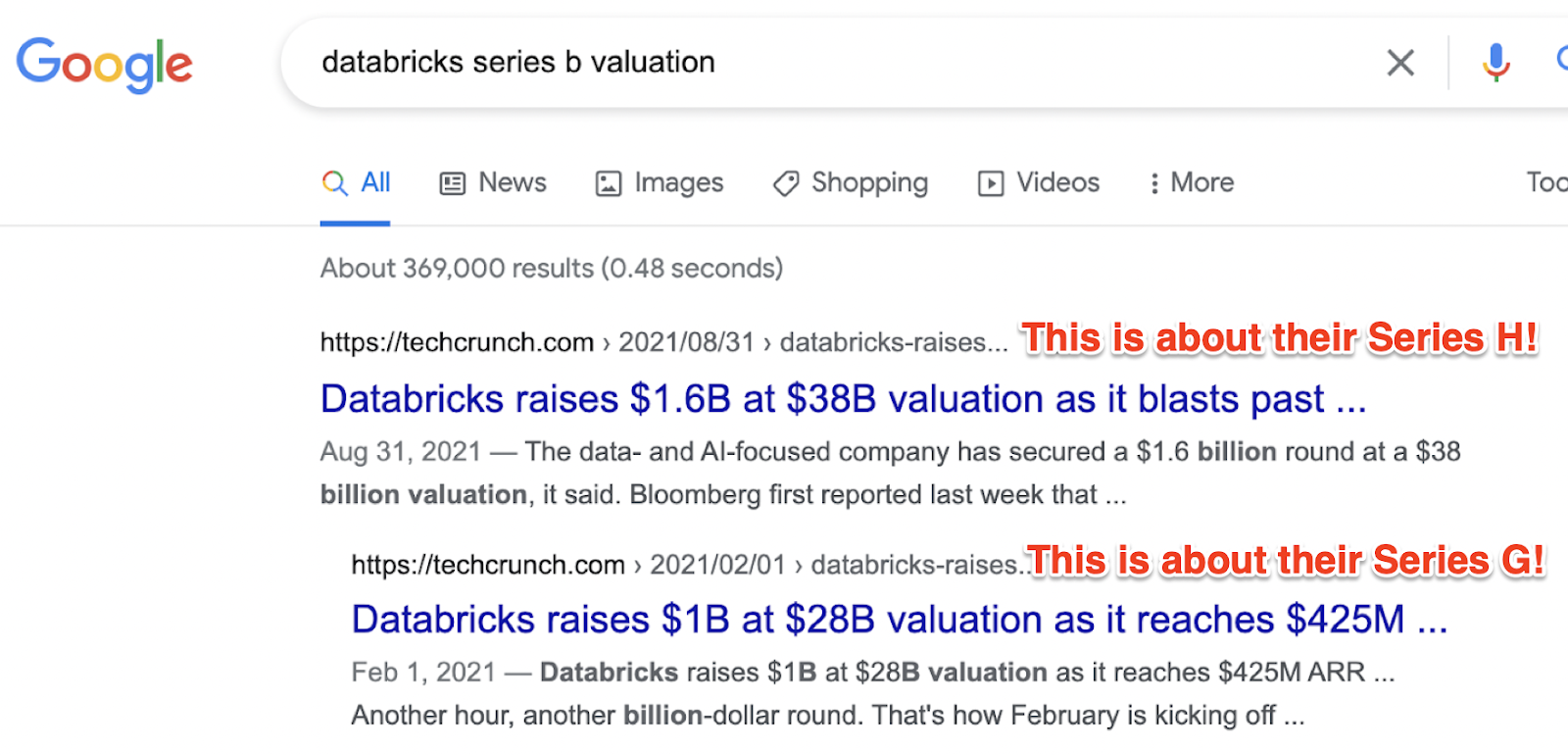

Anecdotally, I’ve noticed this myself. For example, a friend and I were chatting about Databricks last week, and we searched "databricks series b valuation" in order to figure out what their Series B valuation was. Unfortunately, Google doesn't understand what "series b" means (it seems to confuse "b" and "billions"), so the first search result is irrelevant. I don't even get any information about their Series B until below the fold!

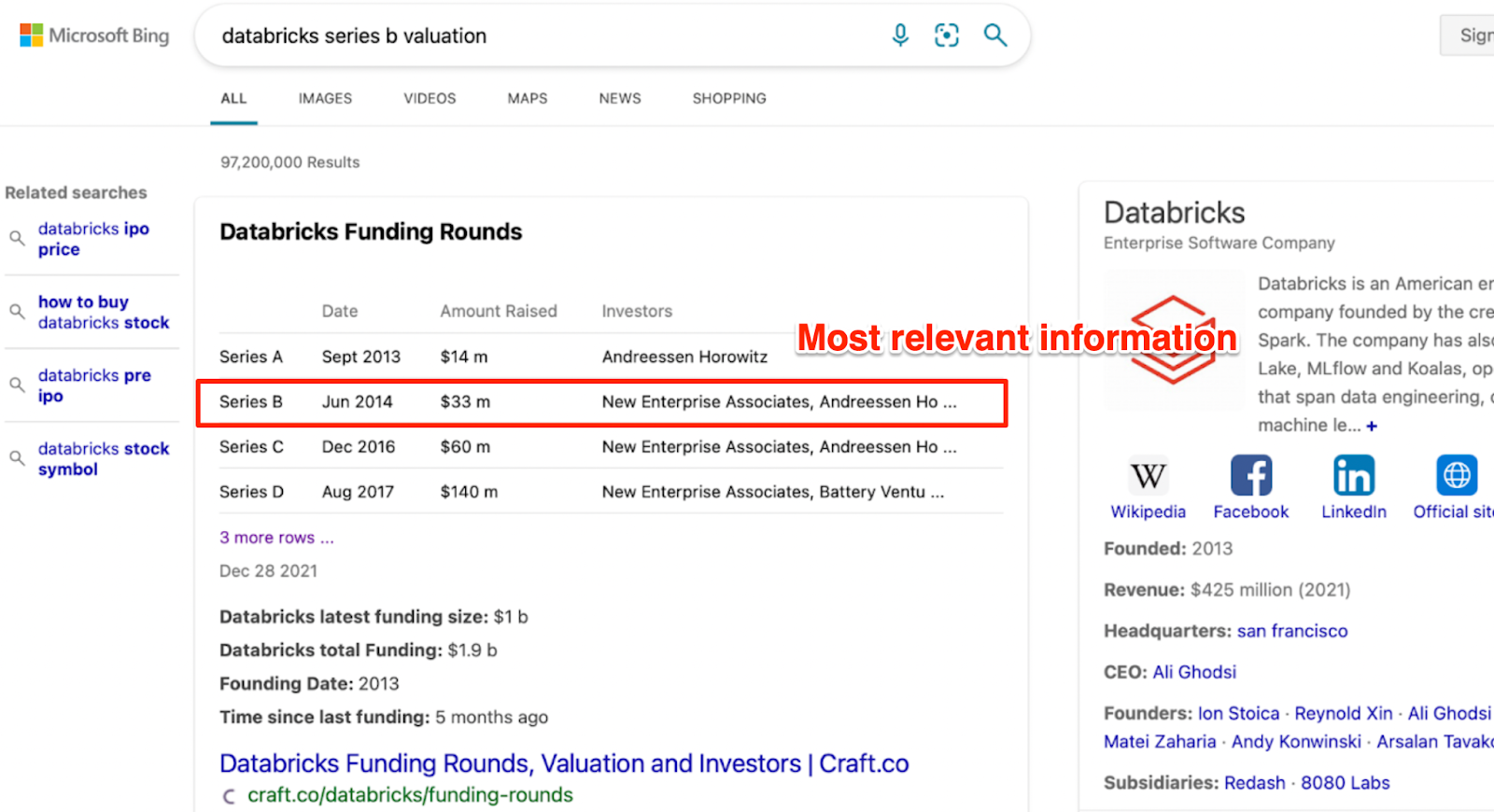

In contrast, Bing's search results page is much better. Information about the Series B is right in the expanded first search result (it doesn’t contain valuation information, but that’s expected because the Series B valuation isn’t public), and the right-hand sidebar is also quite helpful.

So why might Google Search be deteriorating? A few plausible reasons:

- Google has been prioritizing short-term ad revenue over search quality. Interestingly, Google has a well-known paper explaining why focusing on the long term is better for users and their business!

- Information is moving beyond traditional webpages. These days, content often lives on Twitter, Facebook, YouTube, Medium, Reddit, etc. The Internet today is very different from the Internet that Google Search was born in!

- Historically, Google Search contained little ML. From what I've heard, this has changed in recent years, due to changes in leadership and improvements in AI. Is it possible that ML is inadvertently making quality worse?

- Crucially, measuring search quality is a very difficult problem. Naively, for example, you might think that a better search algorithm leads to more clicks: when I search for "databricks series b valuation", you might think that I want to click on a website containing the information. But ideally I might never click on a website at all! The ideal search engine results page (SERP) may be one that displays the valuation at the top of the SERP itself. What’s more, clicking is often a bad sign: I might click on Google's first search result about the Series H because I mistakenly think it contains information about the Series B too.

So is Google Search actually deteriorating? How good is it these days, and how does it compare to its competitors?

I used to work on Search Measurement at YouTube, Twitter, and Microsoft, and it's one of the major use cases Surge AI supports for its customers. So let’s play around and analyze just how good Google Search is in 2022!

Background: Human Evaluation

First of all, how do you even measure the quality of a search engine in a rigorous way? As mentioned above, it's very difficult to measure search quality using traditional metrics.

- Clicks aren't necessarily something you want to optimize for, for the reasons above.

- Neither is time spent searching: is a short session a good thing (perhaps you found your answer immediately) or a bad thing (the search results were so bad you quickly gave up)?

- Perhaps you can measure reformulations: if your initial search query failed, you may rewrite your query and try again, so an increase in reformulations could be viewed as a bad thing. But many people will give up instead of reformulating, and how do you tell whether a query is a reformulation anyways?

- Maybe long-term metrics are the solution. Happy Google Searchers will continue searching on Google. But running long A/B tests is painful if you want to quickly iterate, and even if you're unhappy with Google, is it likely that you'll switch to a competitor?
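To make the reformulation problem concrete, here is a minimal sketch of a naive detector: it flags two consecutive queries as a reformulation when they are close in time and share enough words. Everything in it is an assumption for illustration (the function names, the 0.3 overlap threshold, the 120-second window, and the example queries); it is not how any particular search engine does this.

```python
def token_overlap(query_a: str, query_b: str) -> float:
    """Jaccard similarity between the lowercase word sets of two queries."""
    a = set(query_a.lower().split())
    b = set(query_b.lower().split())
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def looks_like_reformulation(prev_query: str, next_query: str,
                             seconds_apart: float,
                             min_overlap: float = 0.3,       # assumed threshold
                             max_gap_seconds: float = 120.0  # assumed window
                             ) -> bool:
    """Naive guess: close in time, overlapping words, but not an exact repeat."""
    if prev_query.strip().lower() == next_query.strip().lower():
        return False  # an identical repeat is a refresh, not a rewrite
    if seconds_apart > max_gap_seconds:
        return False
    return token_overlap(prev_query, next_query) >= min_overlap


# Hypothetical query pairs, made up for illustration.
# Shares 3 of 5 distinct words, so the heuristic flags it.
print(looks_like_reformulation("databricks series b valuation",
                               "databricks series b funding", 20))
# Plausibly the same need, but no exact word in common ("cat" vs "cats"),
# so the heuristic misses it.
print(looks_like_reformulation("cat stuffy nose remedy",
                               "natural ways to heal cats who have allergies", 20))
```

The second pair is the interesting one: a person would probably call it a rewrite of the same need, but simple word overlap can't see that, which is part of why reformulation rate is a shaky metric.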

What's a search engine to do? One alternative that Google pioneered is the idea of human evaluation: in order to measure search quality, why don't you simply ask human raters how good your search results are? In other words, you give human raters a set of search queries and search results, and ask them to rate how well each search result satisfies the intent behind the query.

There are many nuances to this approach. For example: How do raters know the intent behind the query? Do you rate search results individually or the SERP as a whole? Where do you get these raters? But overall, it's my favored approach as well.

Example: Google Search Quality

So in order to measure the quality of Google Search, here was my process:

- I leveraged a set of human raters from Surge AI. (We’re a new kind of data labeling platform with high-skill human raters, built with quality as our top focus — whether you need savvy social media users tuned into US politics to help clean up the Internet, computer science graduates to train AI to answer how neural networks work (many even from Ivy League schools!), high school teachers to train educational question-answering AI systems for students, or Fortnite players versed in platform jargon to build gaming NLP.)

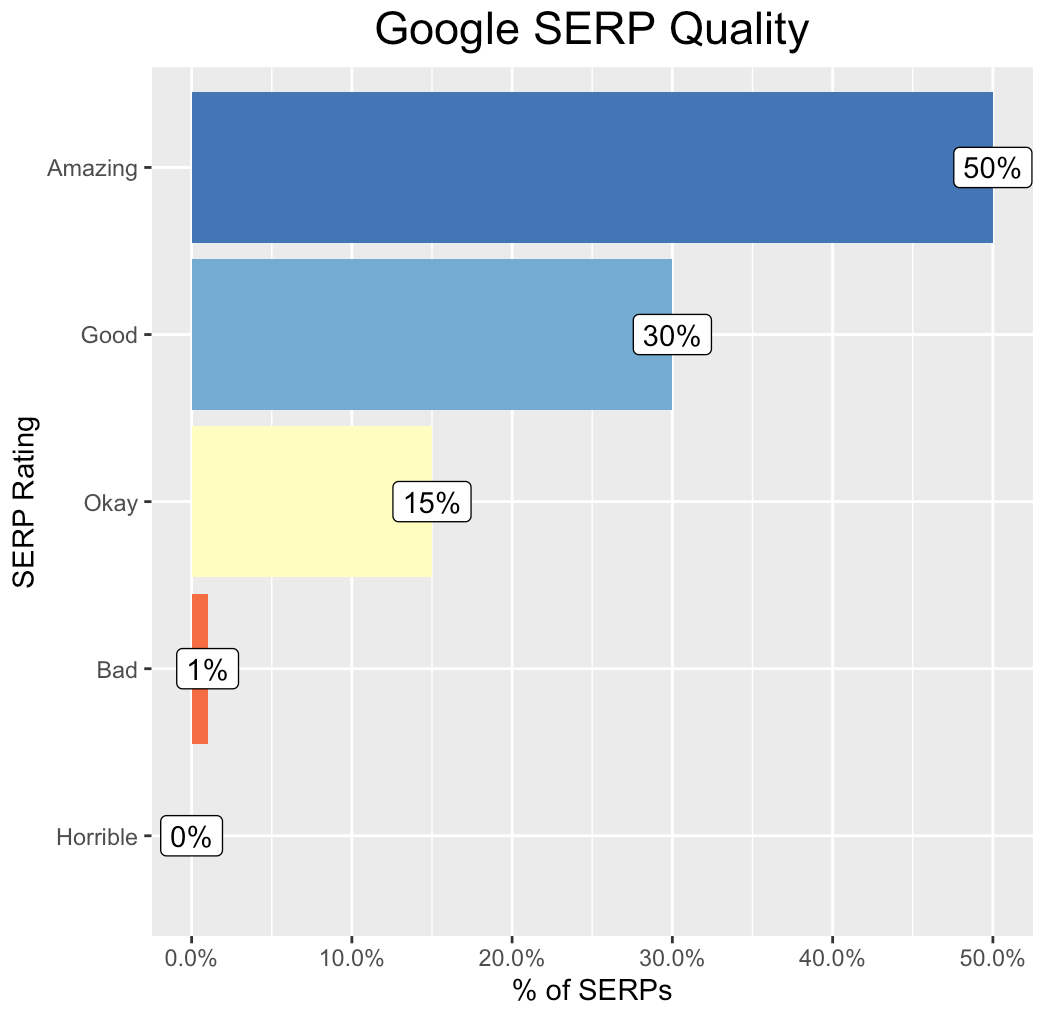

- In order to get search queries to be rated, I asked 250 raters to look up a recent search in their browser history, and to use that as the search query to rate. This is an example of a personalized search evaluation (as opposed to one where you sample random search queries and raters guess the intent behind each one). So these are actual queries representative of the usage patterns of the broad US Internet population.

- Each rater then explained their original intent, rated how well the Google SERP satisfied that intent on a 1-5 scale, and explained their judgment.

Here are the results:

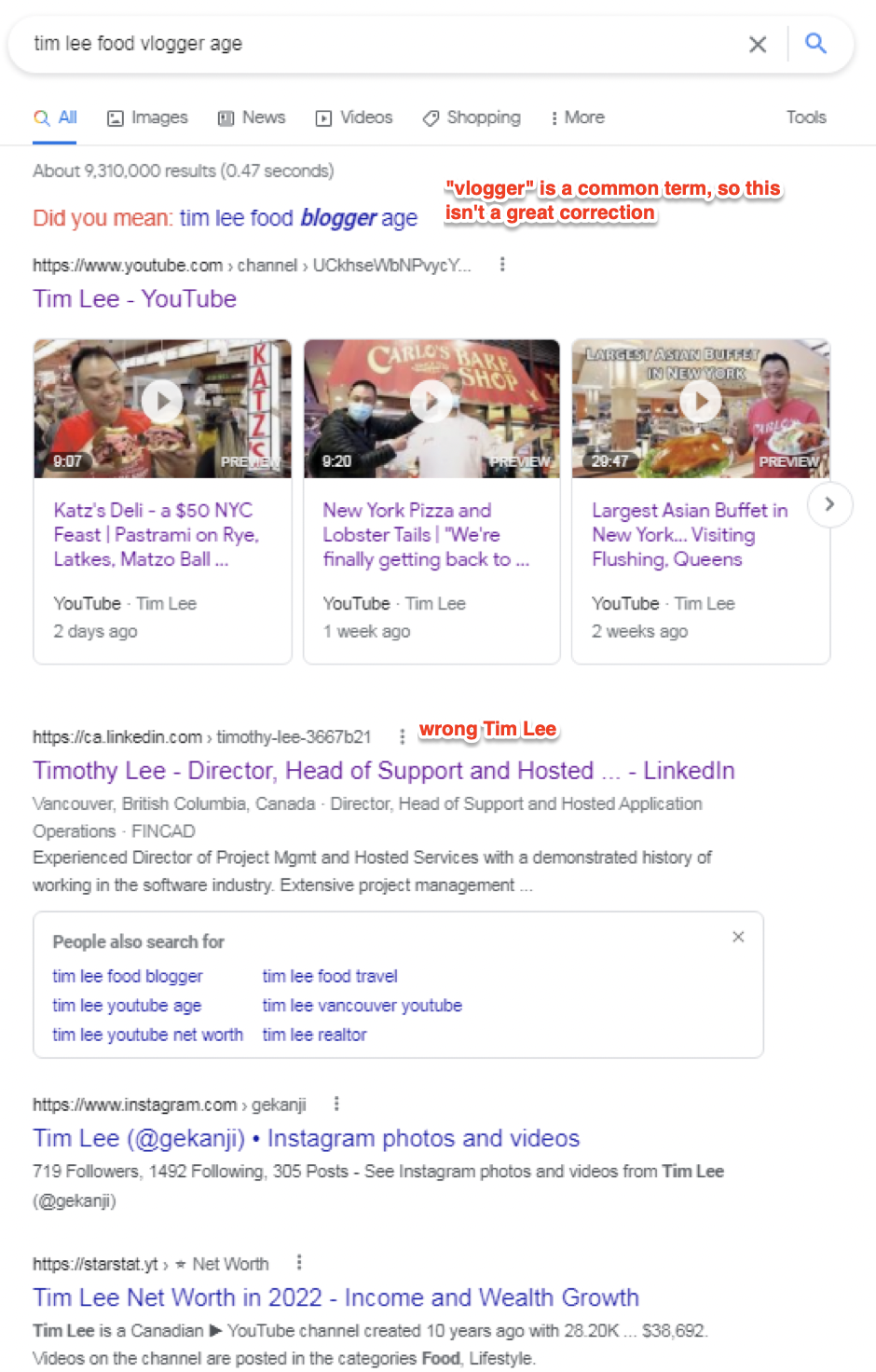
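If you want to run a similar evaluation yourself, summarizing the 1-5 ratings is straightforward. The snippet below is a minimal sketch: the `summarize_ratings` name and the sample scores are made up for illustration and are not the data behind the chart above.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List


def summarize_ratings(ratings: List[int]) -> Dict[str, object]:
    """Summarize 1-5 SERP ratings as a count, a mean, and a share per score."""
    if not ratings:
        raise ValueError("need at least one rating")
    for score in ratings:
        if not 1 <= score <= 5:
            raise ValueError(f"rating out of range: {score}")
    counts = Counter(ratings)
    total = len(ratings)
    return {
        "n": total,
        "mean": mean(ratings),
        # Fraction of raters who gave each score, including scores nobody chose.
        "share_by_score": {s: counts.get(s, 0) / total for s in range(1, 6)},
    }


# Hypothetical ratings from eight raters (not real results).
sample = [5, 4, 4, 2, 3, 5, 1, 4]
summary = summarize_ratings(sample)
print(summary["n"], summary["mean"])
print(summary["share_by_score"])
```

Looking at the full distribution rather than only the mean matters here: a search engine with mostly 5s and a cluster of 1s has a very different problem from one that is uniformly mediocre.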

Here’s an example of a search that Google performed poorly on.

Example

Search Query: tim lee vlogger age

Intent: I wanted to know how old the YouTube vlogger Tim Lee is

Rating: Bad

Rater Explanation: Only some of the results were about the right person, and I couldn’t find his age from the results at all.

(UPDATE: thanks to helpful readers who pointed out that this Wikipedia page is not in fact the Tim Lee mentioned above! Google Search: +1, Editor: 0)

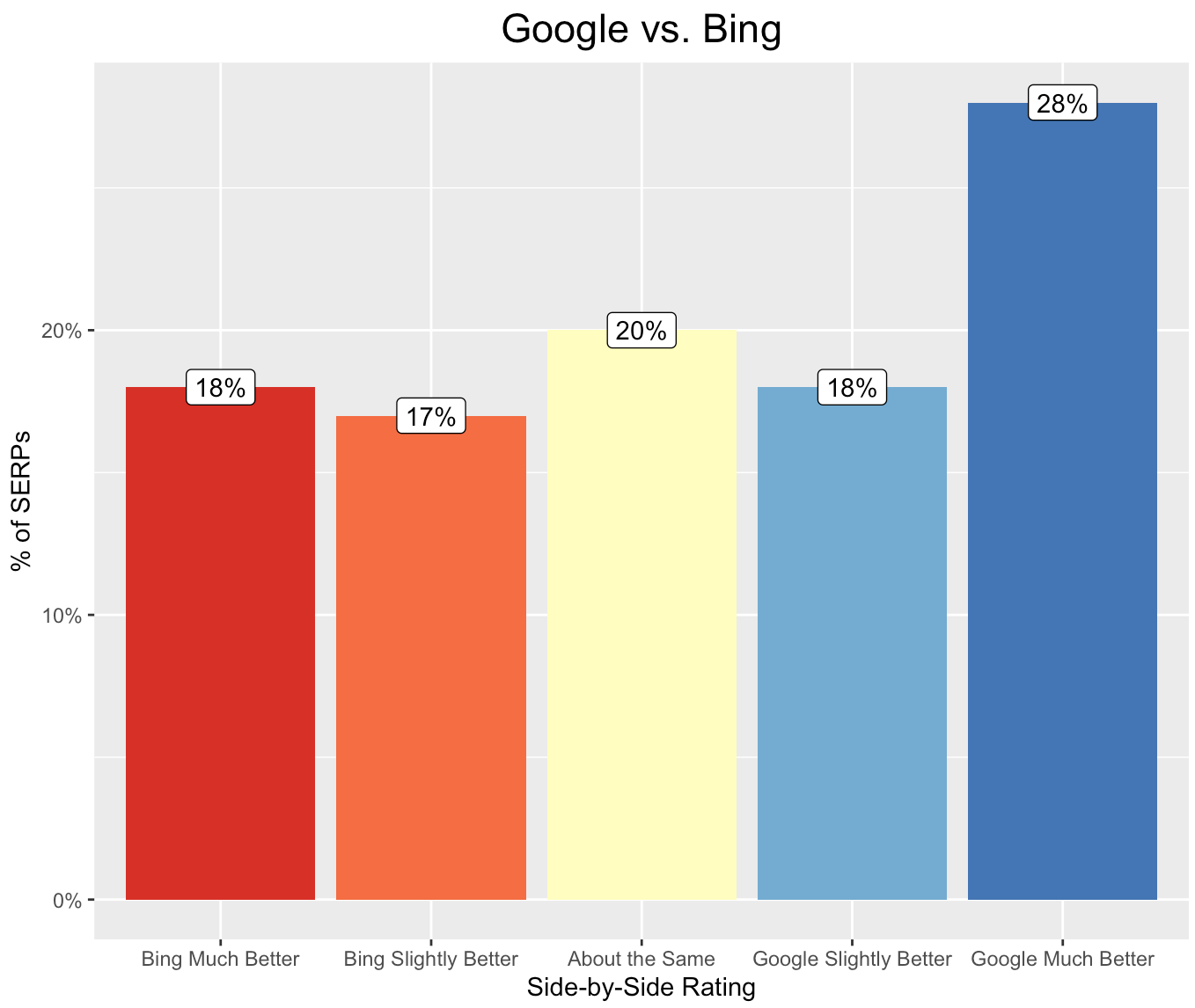

Example: Google vs. Bing

In addition to asking raters to rate the Google SERP, I also asked them to compare it against Bing. This is a side-by-side eval: rather than making absolute judgments (how good is Google's SERP? how good is Bing's SERP?), sometimes it's easier to compare them (which SERP is better?).
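One simple way to check whether a side-by-side preference is more than noise is an exact sign test: drop the ties, then ask how surprising the split of wins would be if each engine were equally likely to win any given query. The sketch below is one reasonable choice under that assumption, not necessarily the test used for the results in this post, and the counts in it are made up.

```python
from math import comb


def sign_test_p_value(wins_a: int, wins_b: int) -> float:
    """Two-sided exact sign test. Ties should be excluded before calling."""
    n = wins_a + wins_b
    if n == 0:
        return 1.0  # no decisive comparisons, so no evidence either way
    k = min(wins_a, wins_b)
    # P(X <= k) for X ~ Binomial(n, 0.5), doubled for a two-sided test.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical counts: engine A preferred on 9 queries, engine B on 1.
print(sign_test_p_value(9, 1))  # 22/1024 = 0.021484375
# An even 5-5 split gives no evidence of a difference.
print(sign_test_p_value(5, 5))  # 1.0
```

The test only uses the direction of each preference, so it ignores how strong the preference was ("Much Better" vs. slightly better). That makes it conservative but hard to misuse.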

Here are the results:

So Google does outperform Bing (the difference is statistically significant), although it's interesting to see the places where Google returns worse results. For example:

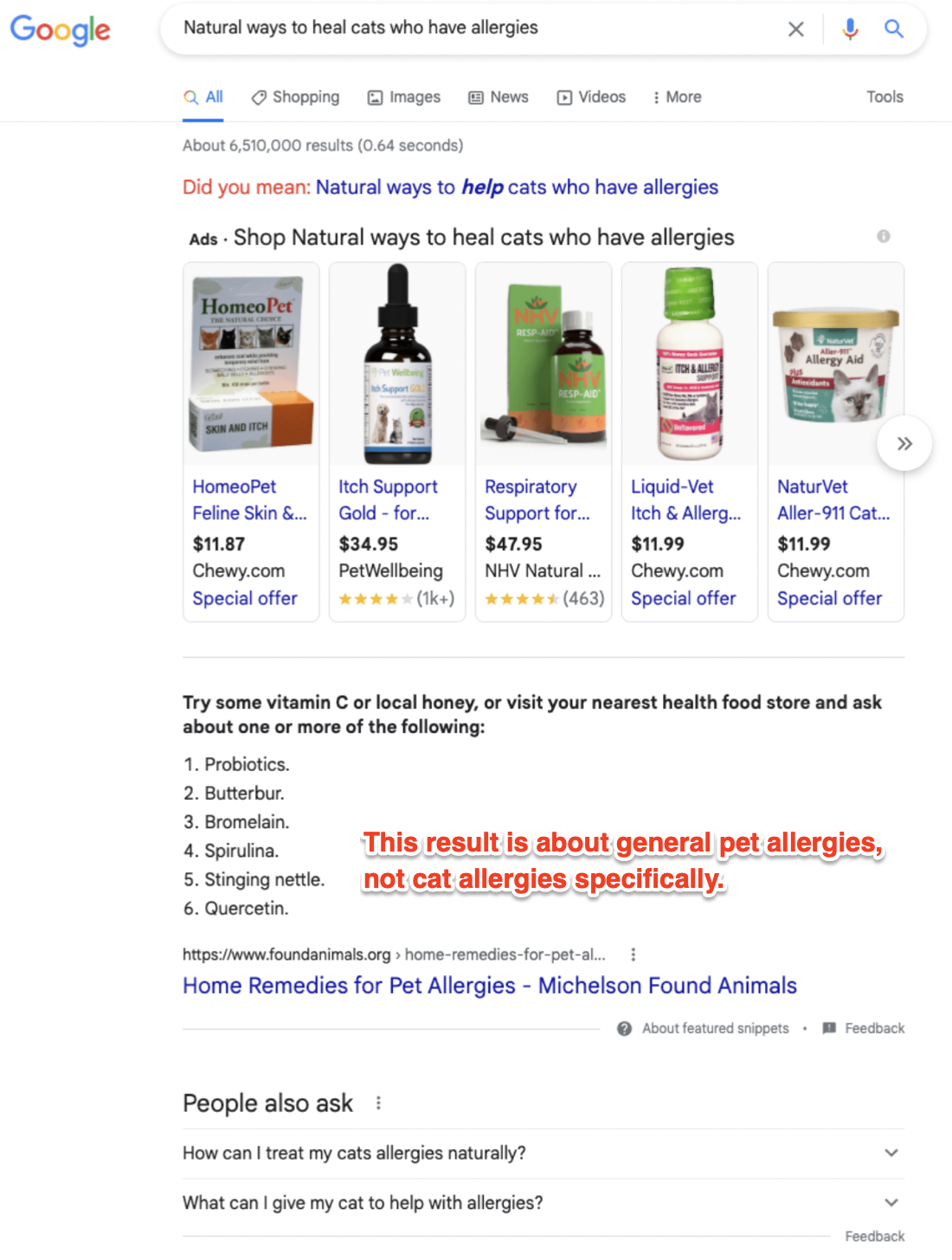

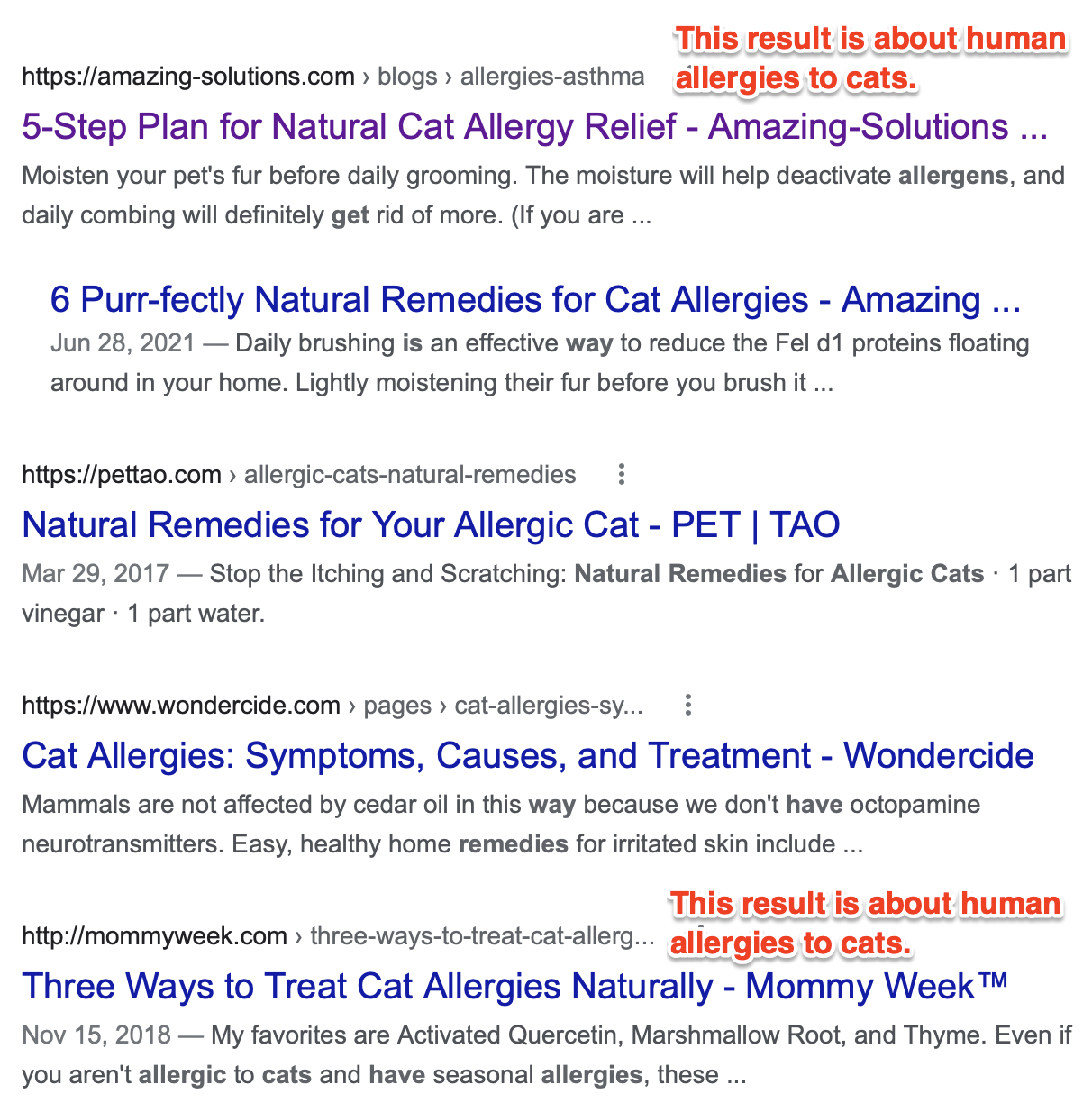

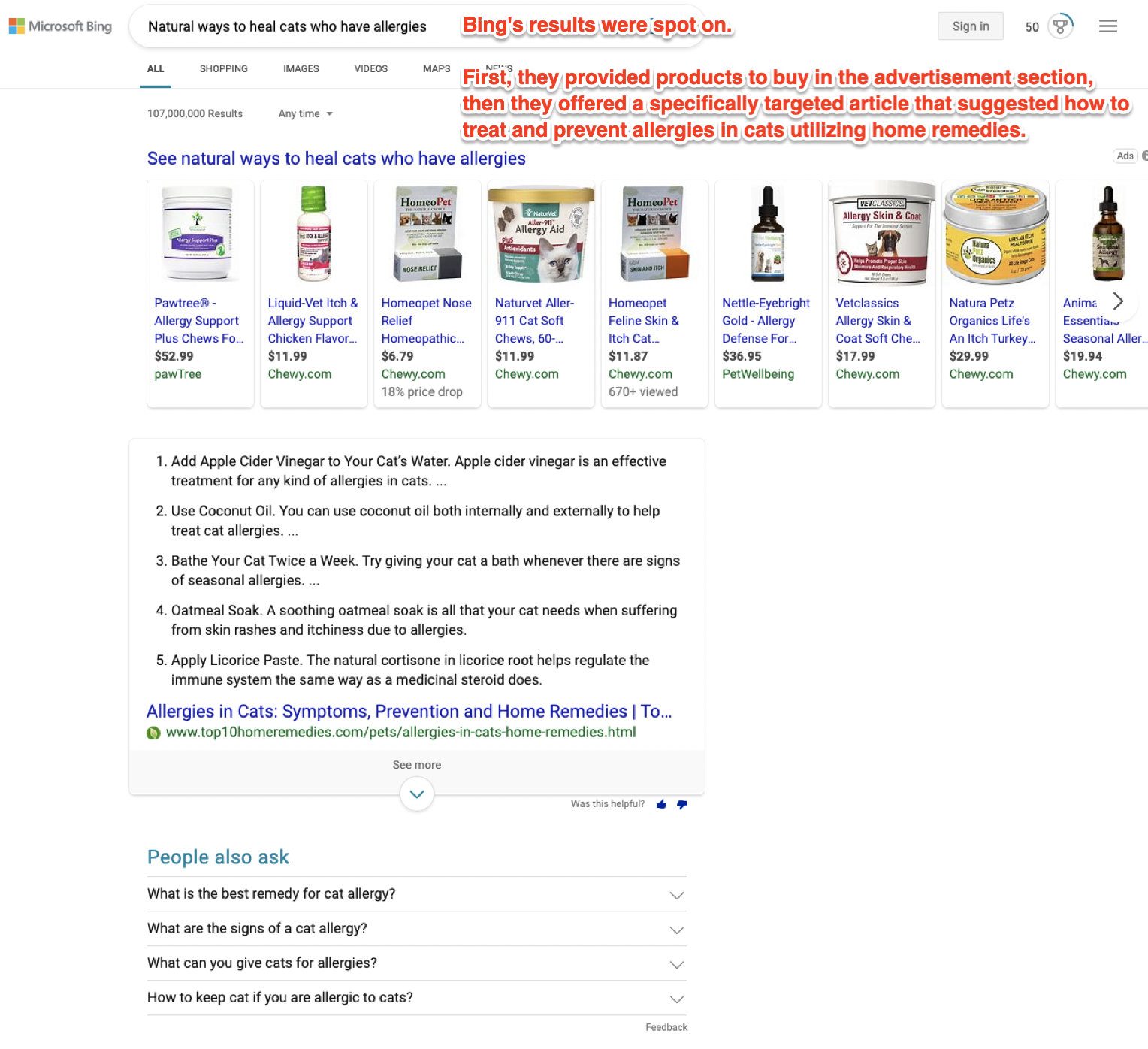

Example #1

Search Query: Natural ways to heal cats who have allergies

Intent: My cat is suffering from a stuffy nose, and I wanted to find out if there were natural remedies or any products that I could buy to cure it.

Google SERP

Rating: Okay

Rater Explanation: The Google results page was not focused on my topic. The results were confused in that half of the content pertained to allergies in cats, and the rest were for general pet allergies or allergies to cats in humans.

Bing SERP

Rating: Amazing

Rater Explanation: Bing's results were spot on. First, they provided products to buy in the advertisement section, then they offered a specifically targeted article that suggested how to treat and prevent allergies in cats utilizing home remedies.

Overall: Bing was Much Better. Bing was clearly the better search result because it answered the search in every possible way that a user may want with ads, articles, images, and how-tos. Google's page offered products to buy and some interesting articles, but their search results were based on a misunderstanding of the query.

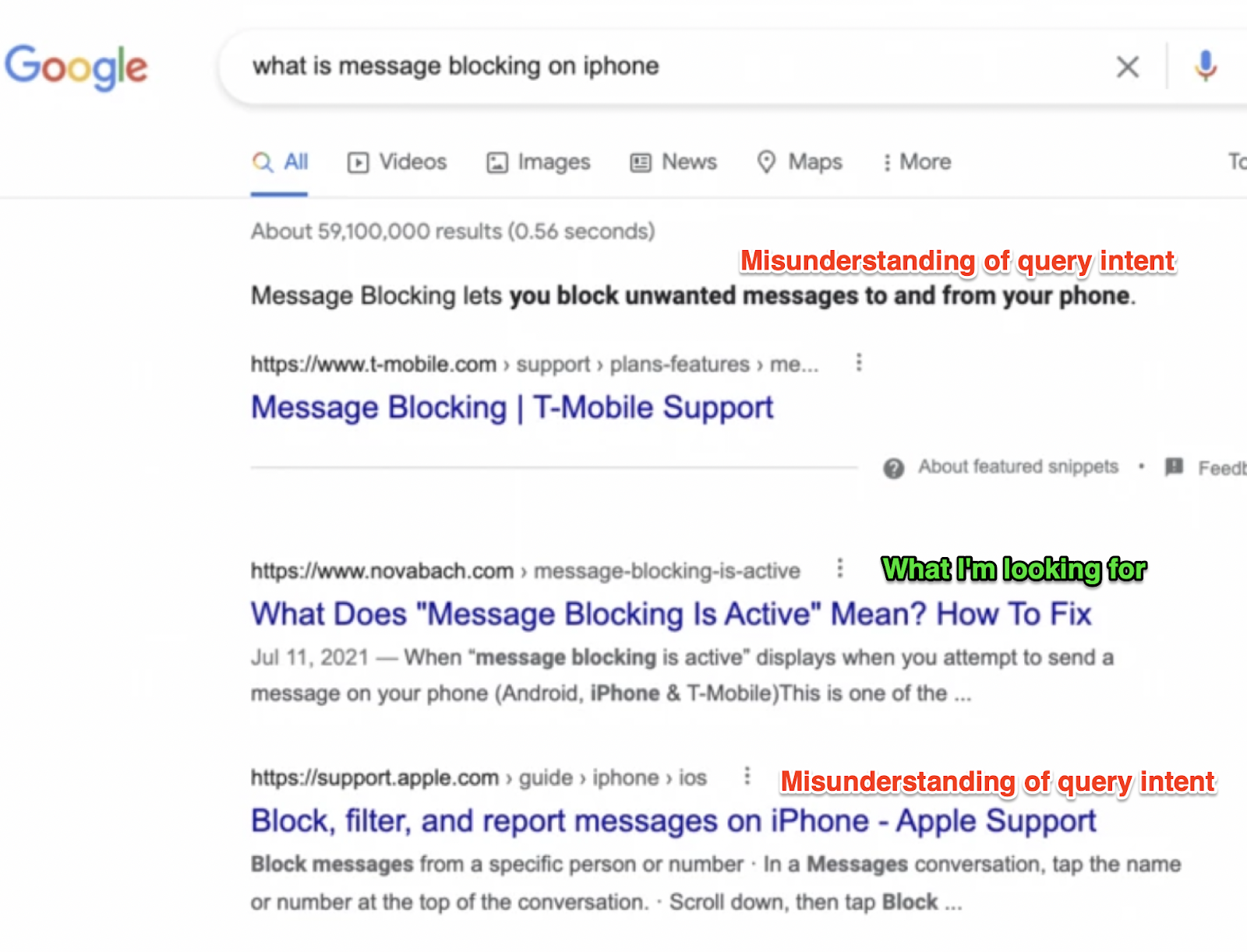

Example #2

Search Query: what is message blocking on iphone

Intent: I received a message on my phone after texting a relative with a line saying "message blocking." I wasn't fighting with that cousin and we're always in good standing, so I thought it was odd. I also thought it was odd because I've never heard of an automatic message telling you your message was blocked; usually companies try to make that unknown and discreet, so I looked it up.

Google SERP

Rating: Okay

Rater Explanation: The first and third search results misunderstood my query and thought I was asking how to block other people. The second result, however, was helpful.

Bing SERP

Rating: Good

Rater Explanation: The website search results all understood what I was looking for, and were related to the message I received.

Overall: Bing was Much Better. Google really didn't understand the question I was asking and gave me unhelpful answers. Bing understood that I was questioning why I was getting that message.

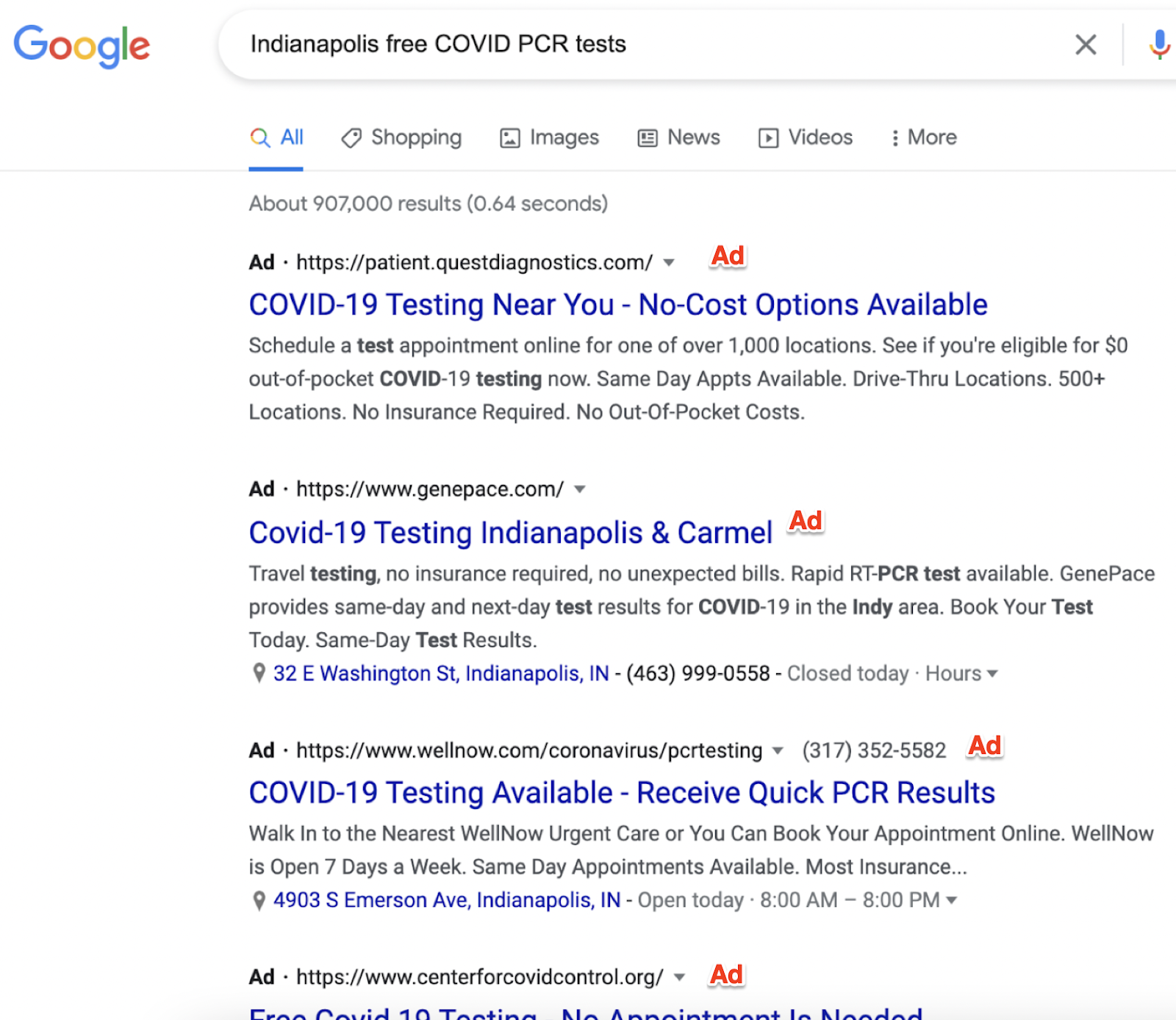

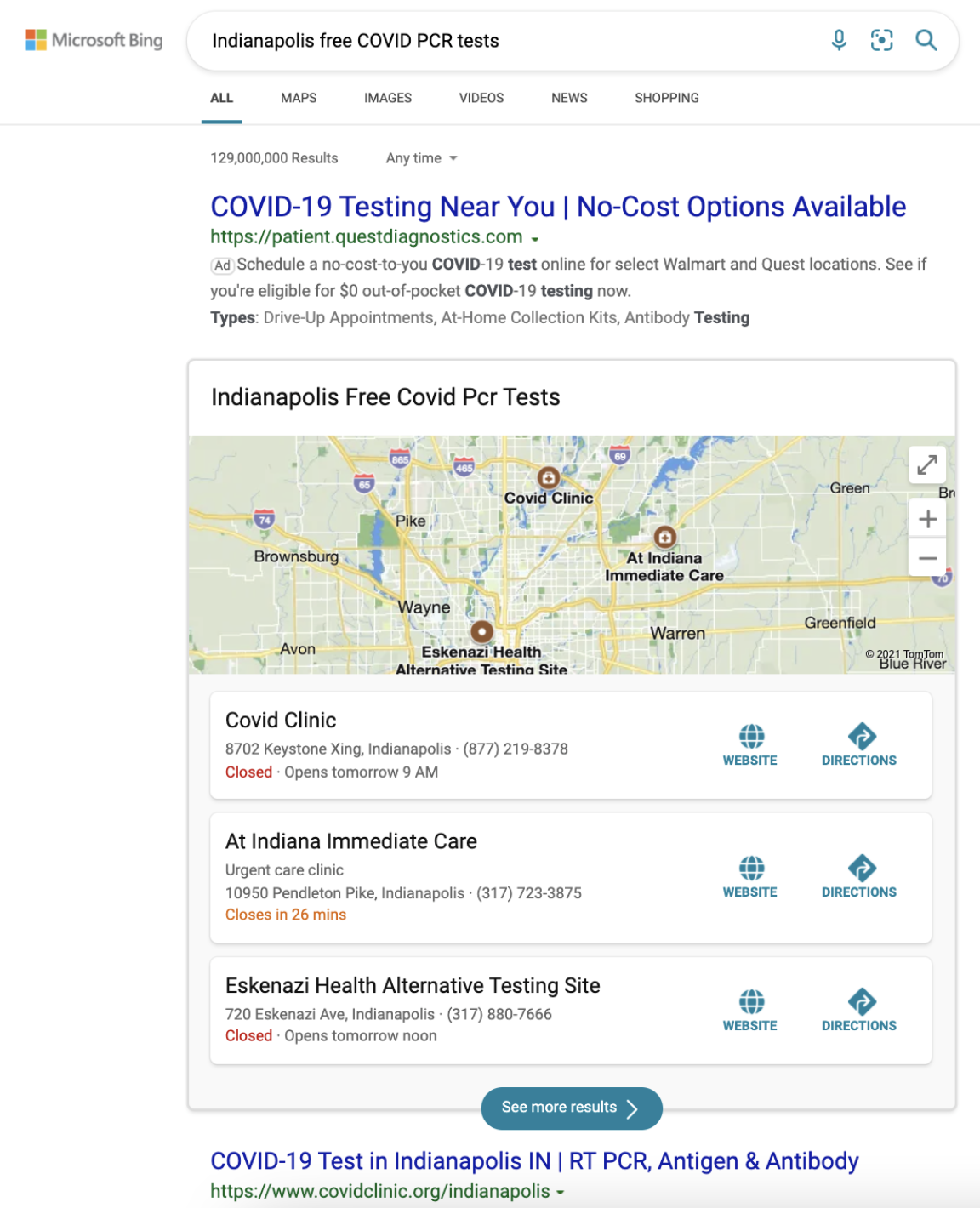

Example #3

Search Query: Indianapolis free COVID PCR tests

Intent: I was attempting to find free PCR tests where I live in Indianapolis. Ideally, a listing or map of all resources in the area would be desirable.

Google SERP

Rating: Okay

Rater Explanation: I would have liked to have seen the ISDH result sooner than I did in the search results. Or at least a map sooner in the search results of available testing options in the city. Instead, I got a page full of ads.

Bing SERP

Rating: Good

Rater Explanation: The second search result was a map with a listing of testing resources, their locations, and when they were open. This was helpful information to me!

Overall: Bing was Much Better. I thought I would have had to click on a link in the search results first before getting a map, but I got one as soon as I searched with Bing.

Beating Google

This human evaluation method can also be a useful way to find patterns of deficiencies in Google Search. For example, I hate searching for recipes on Google, since the results favor Pinterest-style blog posts with endless narrative and ads before you get to the recipe itself. So what are other categories of deficiencies where competitors could arise?

We'll cover that next, as well as a comparison of Google vs. DuckDuckGo!