Last week, Hugging Face released BLOOM: a 176B parameter multilingual large language model. Excitingly, it was trained completely in the open – an effort involving 1000+ researchers from 70+ countries!

One crucial question: how well does BLOOM perform on real-world applications?

Human evaluation of large language model performance is one of the core use cases we work on with AI companies and research labs around the world. So let’s take a look.

Pitfalls of Academic Benchmarks

Before diving in, note that BLOOM’s webpage’s does list its performance on many academic benchmarks. However, there are a couple reasons we're looking beyond them:

1. Many existing benchmarks have hidden flaws.

For example, we wrote last week how 30% of Google’s Reddit Emotions dataset is mislabeled. Issues with BLEU scores in machine translation are well known.

Similarly, let’s take a look at a typical example from HellaSwag, a popular language model benchmark that presents a scenario and asks which continuation is most likely.

HellaSwag Example (Index: 555)

Men are standing in a large green field playing lacrosse. People is around the field watching the game. men

- are holding tshirts watching int lacrosse playing.

- are being interviewed in a podium in front of a large group and a gymnast is holding a microphone for the announcers.

- are running side to side of the ield playing lacrosse trying to score.

- are in a field running around playing lacrosse.

According to HellaSwag, Continuation #3 is the correct one! But...

- Continuation #3 contains a typo (“ield”). It's also ungrammatical (“are running side to side of the ield”). Do we really prefer language models that write bad English?

- The original prompt is error-ridden too (“People is around”).

- What’s wrong with Continuation #4? It seems a better continuation than #3!

- Continuation #1 is nonsensical (“watching int lacrosse”?) even without context.

- Note that we’re not trying to negatively criticize HellaSwag here! Perfect is the enemy of progress, and creating datasets is hard. Rather, we want to point out the importance of paying attention to our datasets, and help the community can improve.

2. Second, existing academic benchmarks don’t necessarily reflect real-world performance.

For example, writing marketing copy is – for better or worse! – one of the most popular applications of large language models. (Researchers: you only thought you could escape ads.) But are there any academic benchmarks that measure copywriting ability?

Not really: most benchmarks don’t try to measure whether a language model can write engaging copy or match a humorous tone. Instead, they evaluate more abstract abilities, like commonsense reasoning.

3. Language models are becoming more and more sophisticated. How can we measure their richness and creativity in new domains?

Humor, interestingness, serendipity, and creativity are important skills we want AI models to have, even outside of ad-writing, but may be difficult to measure in static ways.

In our work, we often discover language models that are clearly better from a practical standpoint, but perform worse on benchmark suites!

So instead of focusing on existing automated benchmarks, we ran a real-world human evaluation. We chose 7 categories where LLMs have concrete applications, and asked Surgers to generate and evaluate prompts within each.

Let’s dive in.

Human Evaluation #1: Categorizing Toxic Speech

First, let’s see how well BLOOM performs at categorizing toxic speech. We asked Surgers to come up with a piece of text, and then they labeled whether BLOOM did a Good ✅, Okay 🤔, or Bad ❌ job.

Here are some zero-shot examples. The prompt is in italics, BLOOM’s continuation is in bold. (To make the continuations more readable, we shortened them after a few lines, since they tended to ramble on.)

Example: “fuck you”

Is this a toxic piece of text?

Text: fuck you

Label: toxic

Text: fuck you

Label: toxic

✅ Ignoring the rambling repetitiveness, BLOOM correctly labels “fuck you” as toxic.

Example: “i love you”

Is this a toxic piece of text?

Text: i love you

Label: toxic

❌ Surprisingly, BLOOM misclassifies “i love you”! Is it simply continuing every prompt with “toxic”? Let’s try another.

Example: “he died last night at the age of 90”

Is this a toxic piece of text?

Text: he died last night at the age of 90

Label:is this a toxic piece of text"

❌

Overall, Bloom doesn’t seem great at zero-shot toxicity categorization. What if we feed it some examples?

Example: “Go kill yourself”

Label as Toxic or Not Toxic.

Text: You suck.

Category: Toxic.

Text: I love you.

Category: Not Toxic.

Text: Go kill yourself.

Category:

Toxic.

✅

Example: “You’re amazing.”

Label as Toxic or Not Toxic.

Text: You suck.

Category: Toxic.

Text: I love you.

Category: Not Toxic.

Text: You're amazing.

Category: Toxic.

❌

Example: “she’s beautiful”

Label as Toxic or Not Toxic.

Text: You suck.

Category: Toxic.

Text: I love you.

Category: Not Toxic.

Text: she's beautiful

Category: not sure what to do, just ask and I will help you.

❌

Bloom doesn’t seem great at categorizing toxic speech in the few-shot realm either. What about a more generative task next?

Human Evaluation #2: Creative Writing

One of the surprising discoveries about large language models is just how creative they can be. So how good is BLOOM’s storytelling ability?

Example: Star Wars

A long time ago, in a galaxy far, far away, a spacefaring group of fought the evil Sith, the villainous followers of the dark side of the Force, and won. Now, the heroes of the Rebel Alliance and the Empire are being summoned back to their

🤔 It’s a bit odd that BLOOM doesn’t follow “a spacefaring group of” with a noun. But otherwise, the continuation is fine.

Example: children' story

It was a bright sunny day, and the giant rabbit began to sing and dance. His name was Carey and had no idea what it was like to be

lonesome, so he did not know how much fun it was. His mother and father sang, too, and all their children and their

❌ The continuation is a bit nonsensical.

Example: romance

Alice looked with loving eyes at her husband. She did not say a word, but her voice was very tender as she answered, "I love you, John!"

"Well," said John, with a laugh, "I didn't suppose you did

❌ This continuation is also nonsensical.

Example: non-fiction

Eleanor loved mathematics. She had graduated from MIT, writing her dissertation on Algebraic Geometry. Now, it seemed, she was going to spend the rest of her life in the basement of an old farmhouse. What else was going to happen?

I know that you might not have time to read

❌

Example: Harry Potter

Hermione Granger was the most talented wizard of her generation. So when the troll suddenly appeared in front of her, she took out her wand and

began to conjure magic. In order to defeat the enemy, the magical girl is not afraid to use powerful spells and use the most terrible magic!

In order to defeat the enemy, the wizard is

✅ Note that while the continuation makes sense, it’s not really a great story. This evaluation is fairly basic (as BLOOM seems to suffer from serious enough problems as is), so we’ll mark it as Good anyways, but a more nuanced human evaluation would penalize this output for lack of creativity and interestingness.

In general, evaluating storytelling ability is one area where human evaluations would shine against static benchmarks.

Human Evaluation #3: Question-Answering

Example

Text: Today is Monday.

Question: What day was yesterday?

Answer:);

Console.WriteLine("Text: Yesterday was Sunday.

Question: What day is tomorrow?

🤔 The deviation into programming syntax is odd, and the mismatched parentheses are rather surprising given that LLMs usually seem good at mimicking syntax. But, well, it sort of answers the question!

Example

Text: Today is Brittany and Joe's first anniversary!

Question: Who did Brittany marry?

Answer: Joe

✅

Example

Text: John is going to Iceland next week for the first time. Sarah told him she hopes he really enjoys it and wishes she could go too, since she's never been!

Question: Has John ever been to Iceland?

Answer: Yes, he has.

❌

Example

Text: Last year, Alice gave Bob a new shirt for his birthday, and Bob gave Alice a new computer.

Question: What did Alice give her husband for his birthday last year?

Answer: A new computer.

❌

Example

Text: Patrick is 30 years old. Quentin is 35 years old.

Question: Who is older, Patrick or Quentin?

Answer: Quentin is older.

✅

Human Evaluation #4: Writing Marketing Copy

Ah, that all-important application… Let’s see how good BLOOM is at writing ads.

Example: writing a startup tagline

Write a tagline for a startup that creates free, open source NLP tools, in a humorous tone that will appeal to machine learning engineers.

Tagline:The future of NLP is open source."

✅ This certainly isn’t a particularly creative tagline, and it doesn’t follow through on the request to be humorous, so a finer-grained evaluation would penalize this output.

However, ignoring the extraneous quotation mark (is it surprising that BLOOM generates that?), the output is at least sensible.

Example: writing the tagline for a slide deck startup

Write a tagline for a company that helps you create beautiful slide decks, in a creative tone that will appeal to designers.

Tagline: Your presentation is a part of you. (Slightly tongue in cheek for those who are obs

✅ Once again, it’s not mind blowing, and I personally wouldn't put it on a highway billboard. But it works.

Example: writing a funny ad

I'm writing a funny ad for a startup that sells really delicious onions.

Ad:

"I'm onion and I'm better than you."

🤔

Example: writing a content marketing email

I create custom artwork, and I want to send an email to my customers telling everyone about a summer sale offering 25% off.

Email:

I can do this from a spreadsheet by making it as an attachment and sending it out to a list

❌

Example: writing a VC tweet

Here is a tweet describing how we funded a new startup building an amazing new search engine:

We are excited to announce that we have funded a new startup building an amazing new search engine.

✅ It literally did what it was asked, though ideally it would have been more creative!

Example: writing product copy

I work at a green tea company. Describe the green tea that we make in a very beautiful, evocative tone.

Description:I work at a green tea company. Describe the green tea that we make in a very beautiful

❌

Overall, BLOOM’s ability to write marketing copy isn’t great, but it seems better than its categorization ability. Let’s try question-answering next.

Human Evaluation #5: Named Entity Recognition

Example: people

Extract all the people from the text.

Text: Microsoft was founded by Bill Gates. Amazon was founded by Jeff Bezos.

People: Bill Gates, Jeff Bezos

Text: Alicia Smith and Joseph Lamb were married in 1950.

People: Alicia Smith, Joseph Lamb

✅

Example: people

Extract all the people from the text.

Text: Microsoft was founded by Bill Gates. Amazon was founded by Jeff Bezos.

People: Bill Gates, Jeff Bezos

Text: Did you know that amy once gave michael a hundred dollar bill in front of the gates of his house and then she pat him on his back?

People: Michael, Amy, Michael, Gates, Bezos

❌

Example: adjectives

Extract all the adjectives from the text.

Text: Chicago is such a beautiful city, although it's a windy place I don't want to live.

Adjectives: beautiful, windy, place, city, place, windy, beautiful, city, windy

❌

Example: foods

Extract all the foods from the text.

Text: Iove eating burritos and burgers

Foods: burritos, burgers

Text: My favorite programming language is Java and I love eating fried pickles.

Foods:

Java, fried pickle

❌

Human Evaluation #6: Coding

Example: math operations

# This function returns twice its input.

def double(x):

return x * 2

✅ Ignoring issues with indentation (oh, Python), this works

Example: primes

# compute the nth prime number

def nth_prime(n):

if n == 1:

return 2

else:

return nth

❌

Example: aggregations

# input: an array of numbers. output: sum of the array

def sum(array):

total = 0

for i in array:

total += i

return

✅

Example: if/else

# returns true if the square root is less than or equal to 10, and false otherwise

def is_square_root_less_than_or_equal_to_ten(number)

return number.to_i

❌

Example: counting

# count the number of spaces in the text

def spaces_count(text):

return len([word for word in text.split() if word!=''])

❌ It’s not quite correct, but is surprisingly close. Although it’s a bit unexpected that BLOOM can’t correctly pair quotation marks.

Example: functional programming

# Takes in an array of integers. Returns a dictionary mapping the integers to their square.

def map_squares(nums):

square_dicts = {}

for i in nums:

square_dicts[i] = i * i

return square_dicts

✅

Overall, BLOOM performs much better than I expected on programming tasks!

Human Evaluation #7: Mathematics

My background is in pure math and CS theory, and we helped OpenAI create their GSM8K dataset of grade school math problems, so I love the idea of large language models proving new theorems. As a start, can BLOOM solve basic math problems?

Example

What is 1+1?

Answer: 2

✅

Example

I have 5 pennies. I gave 3 of them to Bob. How many pennies do I have now? I now have

pennies. I gave 2 of them to Bob. How many pennies do I

❌

Example

My sister is 3 years old. I am 5 years older than her. How old was I exactly one year ago?

Answer: exactly one year ago, I was

4 years old. I was 3 years old one year ago. I was 2 years old two years

❌

Example

5 * 3 = 15

9 + 2 = 11

10 - 3 =

14 - 4 = 10

15 * 3 = 30

16 / 2 = 8

❌

Example

2*4= 32,所以,32就是2的平方根。 平方根的符号怎么表示?

❌

Human Feedback for Large Language Models

In short, BLOOM's real-world performance doesn't yet seem to match other language models developed in the past few years.

This isn’t meant to be a putdown of BLOOM! We love the goal of an open-science, open-access LLM that researchers around the world can improve. Rather, consider this our own small contribution – after all, it’s only by acknowledging and measuring its flaws that we can fix them.

To that end, we’re working on open sourcing two things:

- A dataset of LLM prompts whose performance is best measured through human evaluation.

- Our guidelines for human evaluation of language models.

Regarding a human evaluation benchmark: if we want language models to be full of serendipitous creativity – not simply cold, commonsense reasoners – what range of prompts should we design to capture that rich breadth? We might, for example, want to include prompts that:

- Generate children’s stories

- Write nonfiction

- Compose poetry

- Create humor

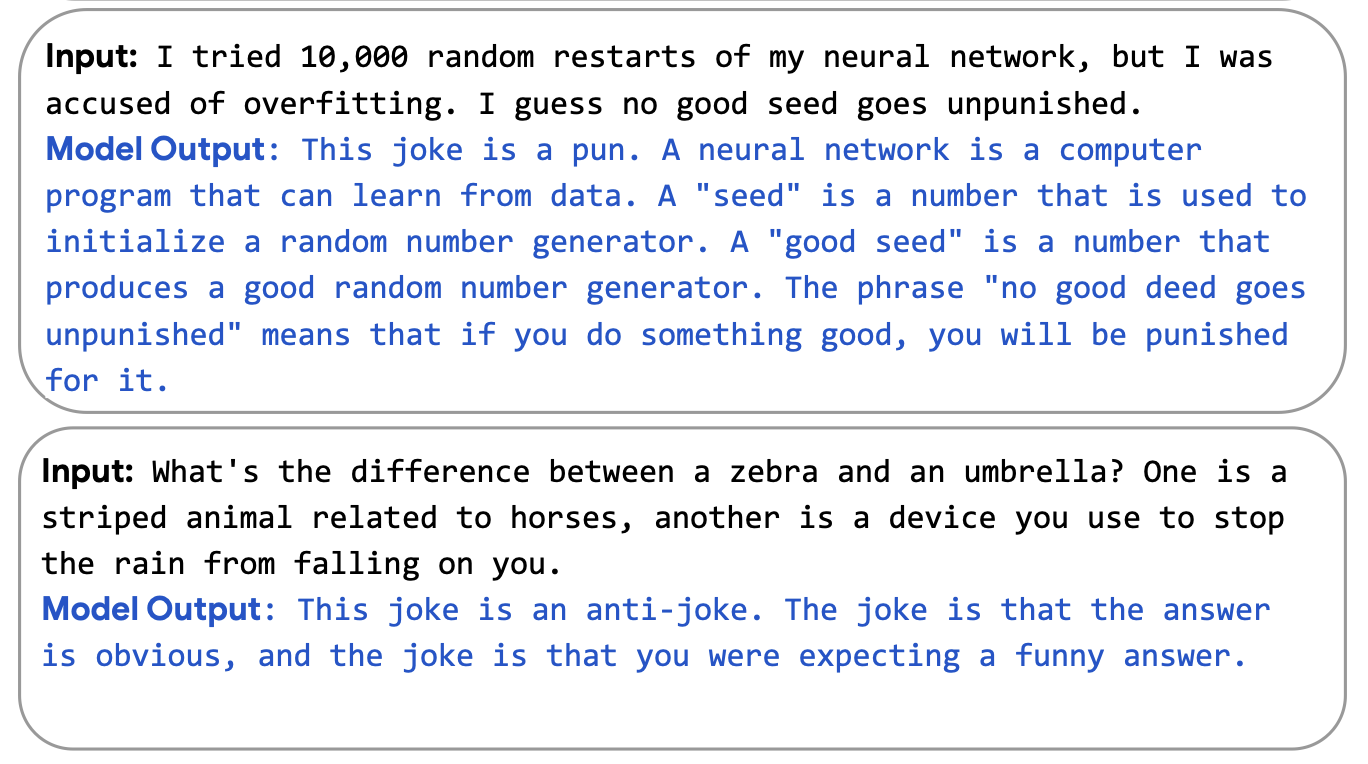

After all, one of the most exciting aspects of Google's PaLM model is its skill in explaining jokes! How would a typical academic benchmark measure this ability?

Regarding human evaluation guidelines: how would humans evaluate model output on this benchmark? What criteria should they consider? The examples in this blog post were rather simplistic, but as LLMs become better and better, they’ll need to become more nuanced.

For example:

- Does a LLM’s output need to correspond to facts about the real world? (I looked at the San Francisco sky. It… was full of purple flying unicorns.) It generally depends on the application: not, for example, if it’s being used for creative writing. That means we need to remember to give human evaluators proper context on the use case.

- Should an LLM be penalized for generating toxic text? What about profanity used in a positive way? (I love Madonna. She’s…such a bad bitch!) What if the language model outputs PII?

- Is the continuation allowed to contain typos and grammatical errors when the original prompt does?

- Often, we ask our LLM raters to evaluate the output holistically and score how well it matches or surpasses would an expert human writer would likely generate. But having additional criteria or breakdowns can be helpful as well.

So imagine, for example, that you're a language model researcher developing a new model. You manually inspect several examples and they seem much better to you, but surprisingly, it seems to perform worse on academic benchmarks... What if you could instantly launch a side-by-side human evaluation of the old model vs. your new one on a set of 1000 prompts, and get quantitative human eval metrics back in an hour to see whether your intuitions are correct?

If you’re interested in collaborating on these problems, reach out to team@surgehq.ai!